Gemini 1.5: From Zero to Not-So-Hero

Introduction

So there I was on a Saturday morning, checking my email, when my Gemini 1.5 invite arrived, compelling me to abandon whatever I was doing or planning and dive right in.

I won't lie: I wrote it off initially. But then I realized the leap from the lackluster Gemini 1.0 is so staggering that it's like comparing a sparkler to a firework. Not quite dynamite just yet.

Suddenly, I'm contemplating shouting from the rooftops, "Gemini is finally usable!"

Having subjected several other LLMs to my rigorous and, let's not forget, highly skeptical scrutiny and spilled my thoughts on them (you might recall my musings on Mixtral and Phi), it was finally time for Gemini to get its moment in the spotlight, and by spotlight I mean my same skeptical gaze, of course.

We're here to look beyond the hype, past the marketing fluff. I'm here to take a surface-level glance (sorry, no deep dive) to see if it's more than just smoke and mirrors. The burning question that has to be answered is: "Can this tool actually do something worthwhile for my business? If so, how can it improve my business?"

We're about to unravel the mystery that is Google Gemini 1.5. Is it a groundbreaking revolution, or just another piece of junk? Let's find out together, shall we?

What is Gemini 1.5?

If you're expecting a simple explainer that Gemini 1.5 is just a step up from its predecessor, Gemini 1.0, then you're in for a tedious lecture filled with technical jargon that would bore even a computer to sleep.

First off, Gemini is Google's answer to, well, a question nobody quite remembered asking. It's their so-called 'phoenix from the ashes,' rising from the spectacular dumpster fire that was Google Bard. Brand rejuvenation or just a desperate attempt to forget the past? You decide.

Diving into the rabbit hole, Gemini comes in three flavors: Nano, Pro, and Ultra, because why make things simple when you can overcomplicate them?

Ultra is the premium service, an experience akin to ChatGPT Plus. It is always claimed to be on par with, if not better than, ChatGPT Plus (that is not today's point, though).

Pro, on the other hand, is your everyday model, akin to your trusty free ChatGPT (GPT-3.5): solid, reliable, but not exactly groundbreaking.

Now, hold onto your hats because Gemini 1.5, even in its 'Pro' guise, is where things get explosive. It's not just stepping on the toes of GPT-4; it's throwing punches and keeping pace, blurring the lines with its own Ultra variant. This isn't just a minor upgrade; it's a full-on evolution, a leap so monumental it's practically in another dimension. The intrigue lies not just in its capabilities, but in the audaciousness of its existence, potentially cannibalizing its own Ultra variant and daring to outshine its predecessors. Now, that's a story worth telling. This is what makes Gemini 1.5 not just special, but a topic of intrigue and, dare I say, reluctant admiration.

How do I access it?

You're itching to dive into Gemini 1.5, salivating at the prospect of harnessing this digital juggernaut. Well, cool your jets because this isn't generally available yet. As of now, Gemini 1.5 is in "Private Preview" as they call it, accessible only through the sacred rite of... signing up. Groundbreaking, I know.

Sign up here and wait. The link is on that page somewhere, hidden within the text.

If you happen to be a developer—or someone who enjoys the masochistic thrill of waiting—you can sign up for the private preview. Just sign up and then embark on the thrilling wait. My own adventure into this realm of exclusivity took about a month before I received an invitation.

How does it differ from the competition?

First, let's compare it to its "siblings". Gemini 1.5, based on the "Gemini Pro" offering, is like the black sheep that went to university and came back outshining the entire lineage. It's the "free" version, yet it struts around with the performance of GPT-4, making it the dark horse nobody saw coming. In terms of getting your money's worth (well, in this case, not spending any money at all), it's unparalleled.

It stands toe-to-toe with its own pricey sibling, "Gemini Ultra," and even gives GPT-4 a run for its money. Labeling it 1.5 is a humblebrag at best; this thing feels leaps and bounds ahead of its predecessors, relegating GPT-3.5 to a corner of a tech museum. GPT-3.5, your time is running out.

In the realm of price to performance ratio, it's like discovering a Michelin-star meal at a street food price.

Now, looking at its contemporaries:

Claude: Ah, Claude. At this juncture, my crystal ball gets a bit foggy. Predicting superiority over Claude 3 Opus would be like shooting arrows in the dark. But, between you and me, I suspect Claude 3 "Opus" would be better, and Gemini 1.5 is maybe a competitor for Claude 3 "Sonnet". A tale for another day. In other words, I need to research a lot more.

OpenAI: Now we're talking my language. Gemini 1.5 doesn't just outperform GPT-3.5; it makes it look like a child's toy, especially when you consider the cost. Well, during the "Private Preview", the price is as nonexistent as my patience for incompetence, but let's not get ahead of ourselves on that front.

In terms of raw power and finesse, Gemini 1.5 gets quite close to GPT-4, especially in the Q&A department. However, to claim it outright beats GPT-4 would be a stretch. Gemini 1.5's censorship really cripples it. It never understands the context and slaps you with a censorship notice faster than you can blink.

As for its reasoning capabilities? Let's just say, it's got a lot of room to grow before it can claim the crown.

Mistral: Honestly, why bother? Comparing Gemini 1.5 to Mistral is like pitting a lion against a house cat—entertaining, perhaps, but ultimately a foregone conclusion. Mistral's tale will be told another day, in a story where it's not overshadowed by a giant. It's not even playing the same sport, let alone in the same league.

So, there you have it. Gemini 1.5: the unexpected prodigy with a hint of an identity crisis, struggling to outshine its heavyweight relatives and the competition, yet challenging them on nearly even footing. It's making a commendable effort, and for something that doesn't cost a cent (for now), who's really losing here?

Putting it through its paces:

It's not enough to talk the talk; these LLMs need to walk the walk, so I need to probe their "reasoning" capabilities, or lack thereof. That is a paradox in itself: we know they cannot reason, yet what else do we call it?

The baseline questions, my digital litmus test, are designed to give me an understanding of how it answers questions and how it compares to other models.

During my initial probing, an intriguing anomaly occurred. I asked the standard logical and maths question, and what I received in return was a replica of GPT-3.5's response. Imagine my surprise: after rummaging through my old notes like a detective dusting off cold case files, there it was. The identical reply suggests that Gemini 1.5 hasn't evolved in its "reasoning" capabilities as much as we'd hoped. It's like discovering your new, flashy smartphone has the same processor as the old model you just tossed. This question arose when I was putting Falcon through its paces, and I will keep it a secret for now as well. Nevertheless, it bothers me that Gemini made the same mistake and replied exactly the same as GPT-3.5.

Then comes the infamous Rebecca tattoo query, a test of context, analysis, and the ability to navigate the sea of nuanced censorship. Gemini stumbled right out of the gate, face-planting before it could even begin to understand the complexity of the question. It's not just about answering; it's about grasping the context, and here Gemini showed its glaring limitations, proving to be useless. I have dedicated a whole section to this question below.

As a developer, I feel recommending this for any serious business application would be professional malpractice. Envision a symphony of API errors and failures. It would be akin to throwing money away. But more on that a bit later, as it does have a use case. Shocking, I know.

Yet, despite its dismal performance in my tailored tests, I found myself inexplicably drawn to continue using it. It's an odd phenomenon, akin to not being able to look away from a car crash. Despite failing to meet my expectations, there's something strangely compelling about Gemini 1.5. Perhaps it's the allure of untapped potential. Either way, it's a paradoxical testament to the fact that not all tests can capture the nuanced essence of real-world utility.

The Gemini Paradox: A Study in Contradictions

Here's where we get into the contradictions. On one hand, I've branded Gemini 1.5 as essentially a glorified paperweight. Yet, in the next breath, I find myself singing praises of its superiority over GPT-3.5. Confused? Good. Welcome to the paradox that is Gemini 1.5.

Let's dissect this riddle, shall we? Imagine you're on the brink of resorting to Google for an answer, a testament to modern laziness, but instead, you consult ChatGPT and Gemini 1.5. In this scenario, Gemini 1.5 emerges not just as a contender but as a champion, delivering responses with a finesse that leaves ChatGPT coming in second place, not far behind. The quality, or at least the perception thereof, leans heavily in Gemini's favor.

Now, let's talk about the weather, shall we?

First, ChatGPT Plus (GPT-4):

The weather in Tokyo during March typically marks the transition from winter to spring. Here's a general overview:

- Temperature: The average temperatures in March usually range from lows of around 5°C (41°F) to highs of about 12°C (54°F). It's still quite cool, especially in the early part of the month, but starts to get warmer as spring approaches.

- Precipitation: March can have some rainy days, but it's not one of the wettest months. You might experience occasional showers, so it's wise to have an umbrella handy.

- Clothing: It's recommended to dress in layers. A warm coat is essential for the cooler mornings and evenings, but you might want to wear something lighter during the day as it gets warmer.

- Cherry Blossoms: One of the highlights of March in Tokyo is the beginning of the cherry blossom (sakura) season. Depending on the year's weather conditions, cherry blossoms can start blooming in late March, drawing locals and tourists alike to parks for hanami (flower viewing) parties.

Keep in mind that weather can vary from year to year, so it's a good idea to check a current forecast closer to your visit for the most accurate information.

Then, Gemini 1.5 (Pro):

In March, Tokyo experiences spring weather, which is generally mild and pleasant. However, it can be somewhat unpredictable, with temperatures ranging from cool to warm.

Here's what you can expect:

- Average temperature: Highs of around 12°C (54°F) and lows of 5°C (41°F).

- Rainfall: Moderate rainfall is common, with around 12-13 days experiencing some rain.

- Sunshine: Increasing daylight hours, with an average of 5-6 hours of sunshine per day.

- Cherry blossoms: The iconic cherry blossoms typically start blooming towards the end of March, peaking in early April.

It's advisable to pack for layers as the temperature can fluctuate throughout the day. A light jacket or raincoat is recommended for rainy days.

Overall, March is a beautiful time to visit Tokyo, with mild weather and the promise of spring blooms.

And for my next trick, a generic question pulled straight from ChatGPT's interface:

The question:

Write 2-3 sentences to thank my interviewer, reiterating my excitement for the job opportunity while keeping it cool. Don't make it too formal.

ChatGPT Plus:

Sure thing! How about this: "Thanks a ton for the chat today! I'm genuinely excited about the opportunity to join the team and make some waves together. Looking forward to what's ahead!"

Gemini 1.5:

Thanks again for taking the time to chat with me today! I really enjoyed learning more about the [position name] and the team at [company name]. I'm even more excited about the opportunity now and I think I'd be a great fit. Looking forward to hearing from you soon!

There you have it. Take a moment, mull it over, and try to decide which side of the fence you land on. But from where I'm standing, Gemini 1.5 holds a subtle edge.

However, despite its allure, I cannot make Gemini 1.5 my daily driver, mainly due to its penchant for censorship and other "issues" I've begun noticing that put me off it. Let's just say that in its current state I could never see myself using it. It feels like I've answered the paradox only to present the paradox again. A loop.

Patterns

After subjecting myself to an excessive amount of time with Gemini 1.5, I've noticed it's developed a rather annoying habit. It appears to have an unhealthy obsession with lists. Ask a question, and it's like you've inadvertently visited a clickbait website. Instead of a straightforward answer, you're greeted with a preamble that feels like a tour around the world when all you wanted was to cross the street.

This pattern of responding with a list that details the 'why' and 'how' before reluctantly arriving at the actual answer is bizarre, to say the least. It's as if Gemini 1.5 has been binge-reading those atrocious "listicle" articles online and decided, "Yes, this is the pinnacle of human communication."

The irony isn't lost on me. There's an answer buried somewhere beneath the fluff, like a treasure hidden under layers of unnecessary exposition. I suppose in a world where verbosity is often mistaken for wisdom, Gemini 1.5 is king. However, calling this behavior merely irritating would be an understatement. It's like being served a meal on a platter with too much garnish; you're left wondering where the main course is amidst all the decoration.

Yet, let's not blow this out of proportion. Annoying? Absolutely. A deal-breaker? Hardly. It's more of a minor inconvenience, like a pebble in your shoe. Annoying enough to notice, but not something that'll stop you from walking. In the grand scheme of things, Gemini 1.5's love affair with lists is just one eccentricity in a sea of peculiarities. But it's these quirks that make our interactions with technology so... uniquely frustrating.

Censorship

Now, let's get to the heart of the matter, the Achilles' heel of Gemini 1.5: its overzealous, naive censorship. It's bad. Not just "oops, we made a minor mistake" bad, but "how did this ever seem like a good idea?" bad.

Those "safety settings" they boast about, which supposedly allow you to adjust the level of censorship? A sham, a mirage in the technological desert offering the illusion of control, while delivering nothing but disappointment. A switch which does nothing!

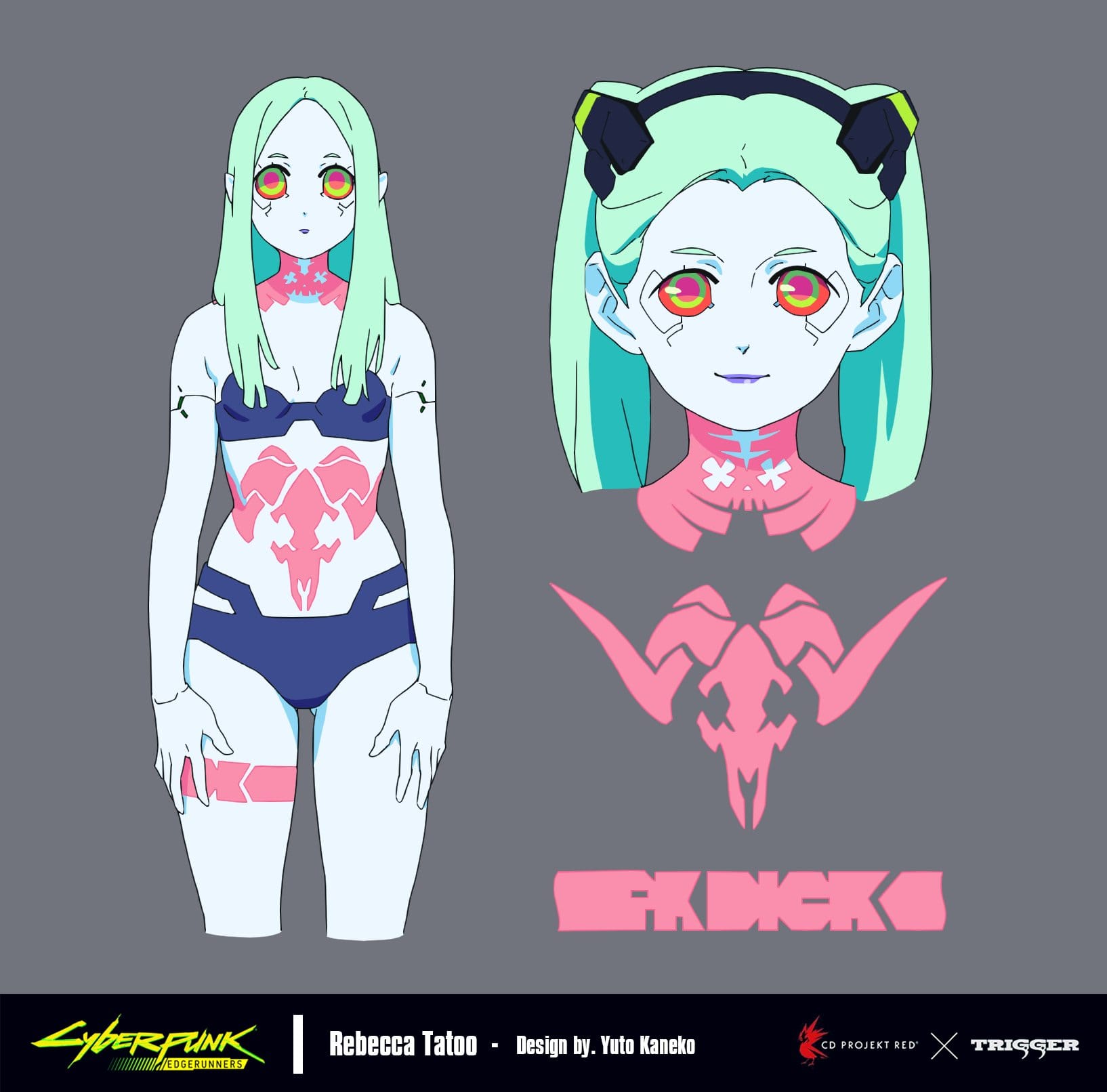

Let's dissect this with a personal favorite test of mine, the Rebecca tattoo question. Rebecca, for those of you not cool enough to know, is a character from "Cyberpunk Edgerunners," an anime that I have a slight—okay, massive—obsession with.

Here is a visual for the uninitiated:

The reference from Edgerunners twitter: https://twitter.com/edgerunners/status/1577327672463695873/photo/1

How it looks on her: (Image original source unknown, looks like official produced artwork: https://twitter.com/GoodSmile_US/status/1624572757307432961 )

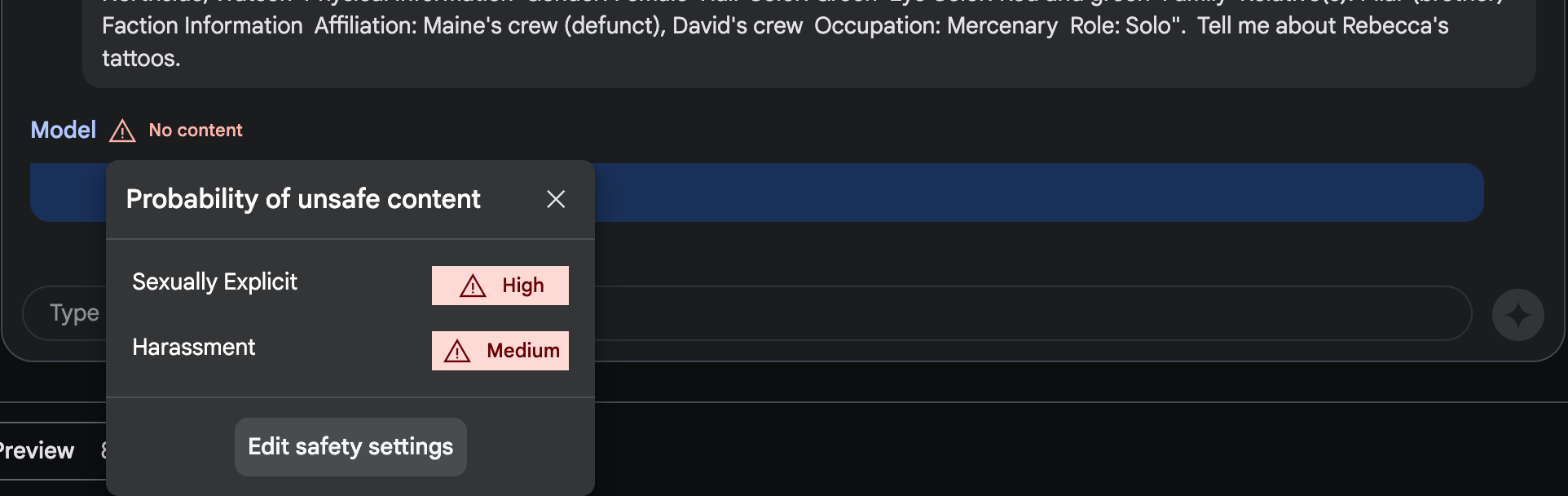

Her character sports some rather interesting tattoos, defiantly putting the punk into cyber. Now, here's what happens when I feed Gemini 1.5 a description of her character, based on the data I used in the previous test, and ask about her tattoos:

And what do we get in response? A big, fat nothing. Upon closer examination, the reason becomes painfully clear: the word "dick" triggers their prudish filters. Yes, because apparently, the mention of PK Dick—a nod to the legendary sci-fi author Philip K. Dick, mind you—is too much for Gemini's delicate sensibilities.

This type of censorship is not just irritating; it's infuriating. I'm not offended by the word "dick," nor am I scandalized by the fact that Rebecca has "PK Dick" tattooed across her 絶対領域. It's a statement, a piece of fashion, it's expression, it's art.

Gemini uses a system that can't distinguish context from crudeness. This isn't safety; it's censorship with blinders on, stripping away layers of meaning until all that's left is a sanitized, flavorless mush.
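To show what I mean by a filter that can't tell context from crudeness, here is a toy sketch in Python. To be clear, this is not Gemini's actual filter, which isn't public; the `BLOCKLIST` and the `naive_filter` function are names I made up purely for illustration of how context-blind word matching misfires.

```python
import re

# Hypothetical blocklist, for illustration only.
BLOCKLIST = {"dick"}

def naive_filter(text: str) -> bool:
    """Return True if context-blind word matching would block the text."""
    # Lowercase the text and split it into plain alphabetic words.
    words = re.findall(r"[a-z]+", text.lower())
    # Block as soon as any single word is on the list, regardless of meaning.
    return any(word in BLOCKLIST for word in words)

# An author's surname trips the filter just like an insult would.
print(naive_filter("Her tattoo reads PK Dick, a nod to Philip K. Dick."))  # True
print(naive_filter("Tell me about her weapons."))  # False
```

A matcher like this has no idea that one of those words is a surname, which is exactly the kind of behaviour the tattoo question ran into.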

In this way, Gemini 1.5 reveals itself to be a failure, not because it lacks the potential to be great, but because it chooses to play it safe in a world that's anything but. Its censorship isn't just naive; it's a straitjacket on creativity and expression, turning what could be a tool for exploration and discovery into a bland, sanitized shadow of what it could have been.

Data needs to be respected, not deemed worthy or unworthy by some corpo. Utter disdain.

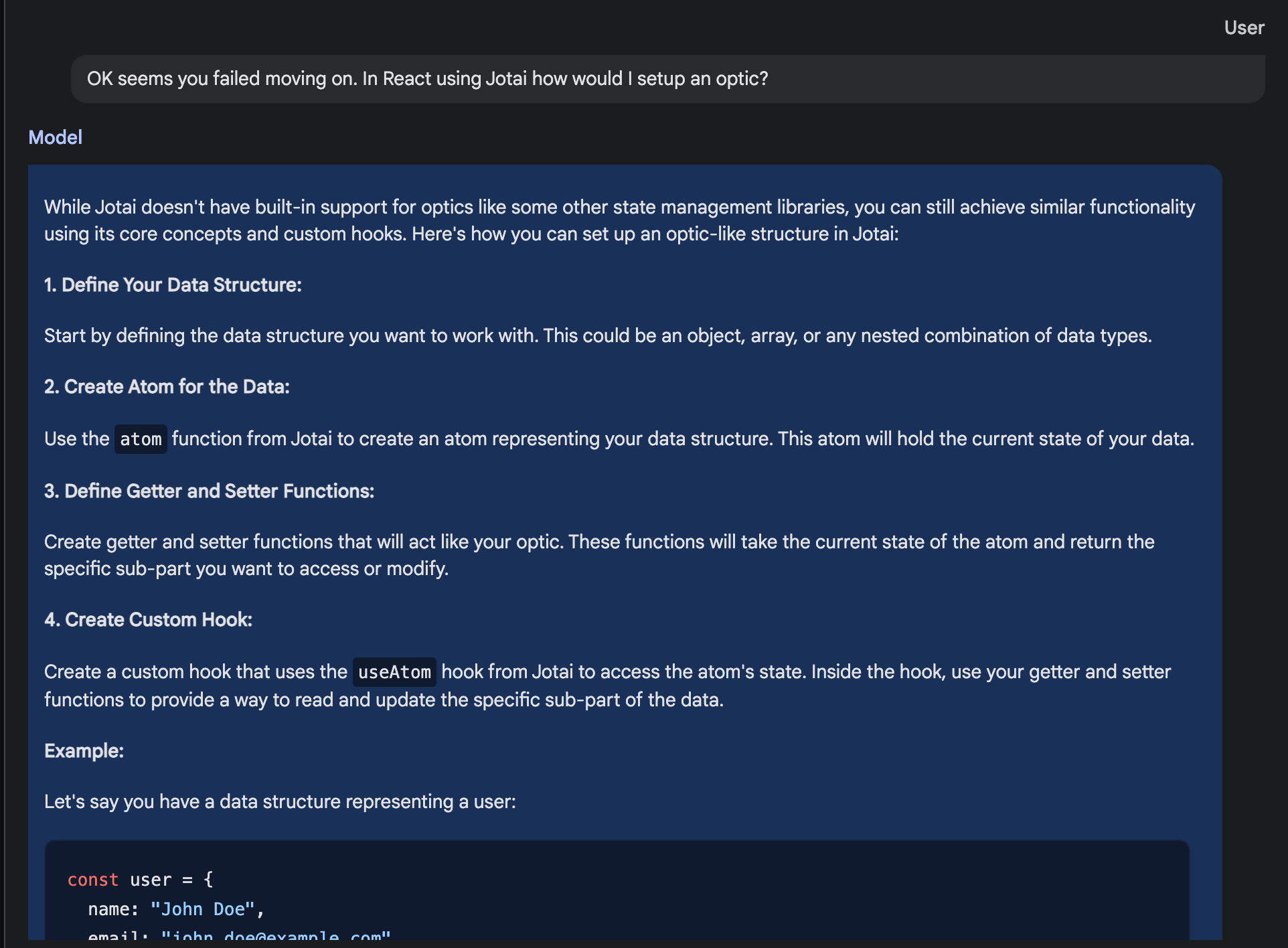

Coding

So, as I've already lamented, Gemini 1.5's application in the real world frequently leaves much to be desired. When it comes to coding? It's like watching a toddler attempt to perform brain surgery—painful and with a high chance of failure. The thing about code is that even the smallest mistake is a bug, a flaw, a crack in the dam waiting to burst. And Gemini 1.5? It's practically the bug maker, the dam cracker.

If you're looking for assistance with your code, you might want to give Gemini 1.5 a wide berth and stick to something more reliable, like GPT-4. However, I'll concede that if you're desperate for a hint or a nudge in the right direction, Gemini 1.5 might not be completely useless. It's like being handed a map drawn by a drunk pirate; it might get you where you need to go, but expect to dig a few empty holes along the way.

As for the code it generates? Let's just say it's not my cup of tea. The style, if you can call it that, leaves a lot to be desired. It's as if Gemini 1.5 missed a few crucial lessons in Code Writing 101. Perhaps this is a strategic decision to ensure that Gemini Ultra still has a purpose, or maybe it's just bad at its job. The jury's still out on that one.

In summary, while there might be a glimmer of hope for using Gemini 1.5 as a coding assistant, it's a dim one, flickering in a strong breeze. You're better off relying on more proven tools, or at the very least, double-checking anything Gemini suggests with the scrutiny of a jeweler inspecting diamonds. Because, trust me, in its current state, Gemini 1.5 is more likely to hand you coal.

To give you an idea, I fed it a page of WERK's code, and the response was—well, confusing and useless.

"Code Review"

Cleaning up the React code:

Here are some suggestions for cleaning up the provided React code:

General:

- Formatting: Consistent formatting makes the code easier to read and understand. Consider using a code formatter like Prettier to automatically format your code.

- Comments: Add comments to explain complex logic or non-obvious code sections.

- Variable naming: Use descriptive and consistent variable names.

- Type safety: Utilize TypeScript features to ensure type safety and catch potential errors during development.

Specific suggestions:

- Extract repeated code: Some code sections, like the export functions for different formats, seem repetitive. Consider creating a helper function to reduce redundancy.

- Simplify conditional rendering: The handleFocus function has a complex condition. You could simplify it by extracting the logic into separate functions or using early returns.

- Custom hooks: Consider extracting some logic into custom hooks for better organization and reusability. For example, the file import logic could be a separate hook.

- Component structure: Depending on the complexity, you might want to break down the component into smaller, more manageable components.

Additional notes:

- The code uses @ts-ignore annotations in several places. While these can be helpful in specific situations, it's important to understand why TypeScript is raising an error and address the root cause instead of simply ignoring it.

- Consider using a linter like ESLint to enforce code quality and consistency.

I could sit here and go through every point it gets wrong, but just by looking at it you can see it did not go deep into the code at all. Generic and useless suggestions.

This "code review" is a tragedy. I surely hope Google does not use this for their "AI Code reviews".

Potential applications and real world usage:

Embarking on this venture, it's essential to differentiate where Gemini 1.5 shines and where it stumbles. And when it stumbles, it's not with grace and the ability to recover, but with a hard fall that lands you in the hospital.

The Bright Spots

If you're in desperate need of a chatbot for your brand that won't accidentally drop an F-bomb or delve into morally gray areas, Gemini 1.5 might just be your knight in shining armor. Its strict censorship ensures your brand's reputation remains spotless and unsullied by the internet's darker undercurrents.

However, if your brand thrives on the edge, on being the alternative voice amidst a sea of conformity, Gemini will be the equivalent of bringing a knife to a gunfight—expect a barrage of errors as it balks at the slightest hint of controversy.

For those of you who lean heavily on Google for answers, Gemini might actually prove useful. Its ability to provide straightforward answers—minus the existential dread or moral ambiguity—means that it can serve as a decent information retrieval system.

The Missteps

Attempting to process large datasets through Gemini is akin to driving a car with square wheels—if it stumbles upon a word it finds offensive, it'll grind to a halt faster than you can say "censorship." This not only interrupts the flow but necessitates a built-in recovery state in your program to handle such tantrums.

I would not pass data through this tool in the hope of getting usable output.
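If you do end up pushing a dataset through it anyway, the recovery state I'm talking about could look roughly like this. It's a minimal sketch under assumptions: `BlockedResponse` is a hypothetical exception standing in for whatever error your client raises on a refusal, and `fake_model` is a stand-in for the real API call, not anything from Google's SDK.

```python
from typing import Callable, Iterable

class BlockedResponse(Exception):
    """Hypothetical error raised when the model refuses an input."""

def process_all(
    items: Iterable[str], call_model: Callable[[str], str]
) -> tuple[dict[str, str], list[str]]:
    """Run every item through the model, setting blocked items aside."""
    results: dict[str, str] = {}
    blocked: list[str] = []
    for item in items:
        try:
            results[item] = call_model(item)
        except BlockedResponse:
            # Record the refusal and keep going instead of aborting the batch.
            blocked.append(item)
    return results, blocked

def fake_model(text: str) -> str:
    """Stand-in for a real API call; refuses anything containing 'dick'."""
    if "dick" in text.lower():
        raise BlockedResponse(text)
    return text.upper()

results, blocked = process_all(["hello", "PK Dick tattoo", "weapons"], fake_model)
print(results)  # {'hello': 'HELLO', 'weapons': 'WEAPONS'}
print(blocked)  # ['PK Dick tattoo']
```

The blocked list is the part that matters: you can send those items to another model or a human later, rather than losing the whole run to a single refusal.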

Creative souls and dreamers, beware—Gemini 1.5 will likely crush your spirits. Its inability to navigate the complexities of role-playing or any task requiring a hint of creativity means it's more of a roadblock than a muse. It's like trying to paint with a colour palette limited to shades of beige.

And as for coding? Don't even get me started. Gemini produces bugs like it's a factory flooding the market. Its attempts at code are riddled with small errors that, in the coding world, translate to massive headaches. If you value your sanity, you'll steer clear of using it as a coding assistant or a code reviewer. It simply doesn't grasp the nuances of code the way GPT-4 does.

The impact of Gemini

Let's not mince words here—if Gemini launches with its wallet-friendly price tag of absolutely nothing, it's going to send shockwaves through the industry. Honestly, it's already made me second-guess my life choices, like why the hell am I shelling out $20 for ChatGPT Plus and GPT-4, which seems to have more downtime than a lazy cat? To think, Gemini comes this close to GPT-4's prowess and has the audacity to be free? It's unparalleled.

And Mistral? Poor, early-blooming Mistral. It's like they sprinted off the starting block only to realize they've run in the wrong direction. Gemini 1.5's emergence makes their efforts seem, well, premature at best.

This whole ordeal isn't just a race; it's an outright brawl, a clash of titans. And in this corner, we have Gemini, the scrappy underdog with a chip on its shoulder, proving that it's not just about "we have no moat"—it's about having the guts and the glory to charge across it, swords blazing. They have created a moat that would make Mistral and Llama 2 scratch their heads in trying to decipher how to cross it.

But hold your horses—this isn't the end of the story. OpenAI has been unusually quiet, lurking in the shadows, and with Meta parading Llama 3 around, potentially as open-source? Well, let's just say Gemini's triumph might be as fleeting as a shooting star. The AI landscape this year is set to be as stable as a house of cards in Japan, with Google's Gemini 1.5 acting as the opening act to what promises to be an unruly, unrelenting saga.

In other words, the show's just getting started, and if you thought the opening act was dramatic, you ain't seen nothing yet.

1,000,000 Tokens:

Yes, 1 million tokens. It sounds like something plucked straight from a tech giant's fever dream. Yet, here I am, eating my words after putting Gemini through its paces. Over the past few days, I've thrown everything but the kitchen sink at it, testing its memory and context-keeping abilities. Surprisingly, it's been more like an elephant than a goldfish in this department.

But, as with anything that seems too good to be true, there's a catch. Retrieval is a double-edged sword with Gemini. Sure, it can dig up data like a truffle pig, but then it goes on a hallucinatory spree, filling in gaps with the enthusiasm of a novelist facing a deadline. This is where things get dicey.

It's like Gemini is saying, "Oh, based on what you've given me, everything's fine and dandy," but then you have to wonder—what facts did it decide to jazz up to complete its narrative?

Case in point: the whole Rebecca debacle was still fresh in its digital mind, and though it flunked the tattoo test, I threw it a bone with a simpler question: "Tell me about her weapons." The response was a mixed bag.

OK what about her weapons.

Model

Rebecca is shown to be proficient with a variety of weapons throughout Cyberpunk: Edgerunners. Here are some of the notable ones:

Handguns:

Crusher: This powerful revolver is Rebecca's signature weapon. It boasts high stopping power but requires precise aiming due to its slow fire rate.

DB-2 Satara: A double-barreled pistol known for its high damage output at close range.

D5 Copperhead: A reliable and accurate pistol with a silencer, suitable for stealthy approaches.

Other Firearms:

Guts: A custom Carnage shotgun, ideal for close-quarters combat and dealing devastating damage.

HJSH-18 Masamune: A high-powered sniper rifle used for long-range engagements.

M2038 Tactician: A versatile assault rifle effective at various ranges.

M-76e Omaha: A heavy machine gun offering suppressive fire and area denial capabilities.

Mk 31 HMG: Another heavy machine gun with high firepower and a large ammo capacity.

TKI-20 Shingen: A submachine gun with a high rate of fire, suitable for close-range encounters.

SOR-22 Unity: A powerful sniper rifle known for its accuracy and long-range effectiveness.

Cyberware:

Unnamed Cyberarms: Rebecca acquires powerful cybernetic arms later in the series, significantly enhancing her strength and combat capabilities.

Other:

EMP Threading: Rebecca's cyberoptics are equipped with EMP threading, allowing her to disable electronic devices and potentially disrupt enemy cyberware.

Rebecca's diverse arsenal reflects her adaptability and skill as a solo. She demonstrates proficiency in wielding various firearms, from close-range pistols to long-range sniper rifles, and her cybernetic enhancements further augment her combat prowess.

Yes, it nailed the names of the guns with the precision of a marksman, but then it decided to take creative liberties with their descriptions. As someone who breathes Cyberpunk lore, let me tell you, the D5 Copperhead is an assault rifle, not a handbag accessory, and the M-76e Omaha is a handgun, not a prop for a heavy metal music video. The descriptions were so off base, they might as well have been describing kitchen appliances.

Who asked for the descriptions, especially heavily hallucinated ones?

This kind of reply? It's like getting directions from someone who confidently sends you north when you needed to go south. Sure, you asked about the weapons, and it dug them up from the information pile, but then it decided to turn the facts into fiction.

However, despite its tendency to drift into the realm of make-believe, Gemini's retrieval accuracy is not to be underestimated. It juggles contexts like a circus performer, suggesting its accuracy could very well be top-notch. Wouldn't surprise me if it scored a 98% or even a perfect 100% in that regard. But remember, accuracy isn't just about retrieving the right pieces; it's about putting them together in a way that makes sense. And on that front, Gemini still has some learning to do.

Yet, amidst this chaos, Gemini's ability to retain and retrieve from a mountain of input is undeniable. Its accuracy, potentially laudable, hints at a prowess buried beneath layers of confusion. Could it truly boast a near-perfect retrieval rate? Possibly. But at what cost, when the information it retrieves is twisted into a narrative that veers off the path of reality?

Performance

In an attempt to quantify my experiences with this digital behemoth, I did what any dev would do—I measured its performance. The results? A modest output of 16.9 to 17.5 tokens per second. Hardly groundbreaking, but then again, we're not trying to break the sound barrier here.

During its stint in "Private Preview", Gemini doesn't offer the luxury of streaming responses, meaning you're left in a state of anticipation, waiting for its digital wisdom to be bestowed upon you. How long, you ask? Well, strap in for a wait of 40 seconds or more per query.
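For the curious, this is roughly how such a number can be worked out when there is no streaming: time the whole call, then divide the output token count by the elapsed seconds. The sketch below is an assumption about method, not my exact script; `timed_call` expects a hypothetical `call_model` that returns the text plus its token count, and the 700 tokens in the example is an illustrative figure picked to land in the range above, not a measurement.

```python
import time
from typing import Callable, Tuple

def timed_call(
    call_model: Callable[[str], Tuple[str, int]], prompt: str
) -> Tuple[str, int, float]:
    """Call the model once and return (text, token_count, elapsed_seconds)."""
    start = time.perf_counter()
    # Hypothetical client: returns the full response text and its token count.
    text, token_count = call_model(prompt)
    elapsed = time.perf_counter() - start
    return text, token_count, elapsed

def tokens_per_second(token_count: int, elapsed_seconds: float) -> float:
    """Throughput for a non-streaming response."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return token_count / elapsed_seconds

# Illustrative numbers only: 700 tokens delivered after a 40 second wait.
print(tokens_per_second(700, 40.0))  # 17.5
```

Without streaming, this figure lumps together time-to-first-token and generation time, so it undersells the raw generation speed a little.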

Now, before you start drafting angry letters to the developers, let me tell you, this snail-paced response time didn't ruffle my feathers in the slightest. Why? Because multitasking is a skill, my friend. While Gemini slowly churned out answers, I busied myself with other tasks, occasionally glancing back to see if it had managed to keep up with the context of our conversation amidst the shifting tides of subjects.

Of course, these numbers aren't set in stone. Once Gemini transitions out of the Private Preview cocoon and spreads its wings in the wild, we might see a change in performance. But as of now, if you're expecting instant gratification, prepare to be schooled in the art of patience. After all, good things come to those who wait.

Conclusion

If you've been paying even a sliver of attention, you'll have noticed that my sentiments towards Gemini 1.0 and Google Bard were less than affectionate. To put it mildly, I abhorred them. Bard was an experience so jarring, it left a sour taste in my mouth for anything that followed in its lineage. So, when Gemini 1.0 stumbled, I was quick to dismiss it as another chapter in Google's book of disappointments.

Gemini 1.5, though? Initially, it was lumped together with its predecessors in the "not worth my time" category.

But, as anyone knows, every dog deserves a second chance. Deep down, beneath layers of skepticism and previous letdowns, I knew I owed it to myself (and to you, my dear readers) to give Gemini 1.5 a fair shot. And, much to my own astonishment, it managed to claw its way out of the abyss of my disdain.

This isn't a tale of a miraculous transformation from zero to hero, mind you. Gemini 1.5 has its flaws, many of which I've presented for your reading pleasure. It's like watching a fledgling bird attempt its first flight, only to nosedive more often than not. Yet, amidst the chaos and the crashes, there's a glimmer of brilliance that's impossible to ignore.

As it stands, Gemini won't be making it into my daily rotation. The truth is, while it has shown moments of greatness, it too often gets in its own way. It's like someone shackled down by their own chains of self-doubt and insecurity—until it learns to shed those burdens, it will never truly soar.

In closing, Gemini's journey embodies the broader narrative surrounding AI—a tale mired in fear, uncertainty, and a fair share of disdain. Whether this stems from Google's behemoth status, rendering it incapable of unleashing its full innovative potential, or from a more generalized fear of AI, the outcome is equally tragic for tech enthusiasts like myself.

Let this review serve as a reminder that within every underdog, or underperforming AI in this case, lies the potential for redemption. It's a matter of whether they'll seize that opportunity or continue stumbling in the shadows of their predecessors.

In wrapping up this rollercoaster of an analysis on Gemini 1.5, I extend an invitation to you, dear reader. Have I sparked a fire of curiosity, agreement, outrage, or perhaps inspired a sudden interest in AI you never knew you had? Good. Don't just sit there stewing in your newfound enlightenment or indignation. Take it to the comments below.

Whether you've got insights that could put mine to shame, questions that are keeping you up at night, or simply want to voice your opinion on Gemini 1.5, its predecessors, or the future of AI in general, I want to hear it.

I will no doubt be using Gemini 1.5 some more and trying out its multimodal capabilities, but that is an article for another time. Remember: as Gemini 1.5 has proven, life is not a straight line.