LoRA experiment 7: A basic look at Network Rank and Network Alpha settings

Tl;dr In my case the best settings I found so far to be are: Repeats 20, Network rank 86, Network Alpha 86, epoch 10.

At last, some progress! Be warned, though, this post might contain a few NSFW images. Buckle up – this one's going to be quite the ride. We'll be exploring the same topic throughout, so stay with me.

Using my previous post as a foundation, I made some minor adjustments. First, I axed an image, leaving us with a total of 35. Then, I set the repeats to 20 and let the training run for 10 epochs. My intuition told me to try a Network Rank of 86 and a Network Alpha of 86. I'll be documenting the final outcomes this time, with the training concluding at a loss of 0.0607. Let's call this version v1_21 (or 1.2.1) for reference.

Before hitting upon the idea of tweaking the Network Alpha setting, I was honestly baffled about what had gone wrong and what results I should have expected. But now, I've achieved a result that either matches or surpasses v1. I'm pretty relieved my intuition was spot-on about the Network Alpha playing a more significant role than I initially thought.

Admittedly, there was a considerable gap between my last training session and this one, as I struggled to visualize my end goal amidst the deteriorating results. During that time, I indulged in other interests and made progress elsewhere. LoRa training isn't my entire life, but it's nice to juggle multiple tasks. Sometimes, when you least expect it, you stumble upon a solution that reignites your enthusiasm.

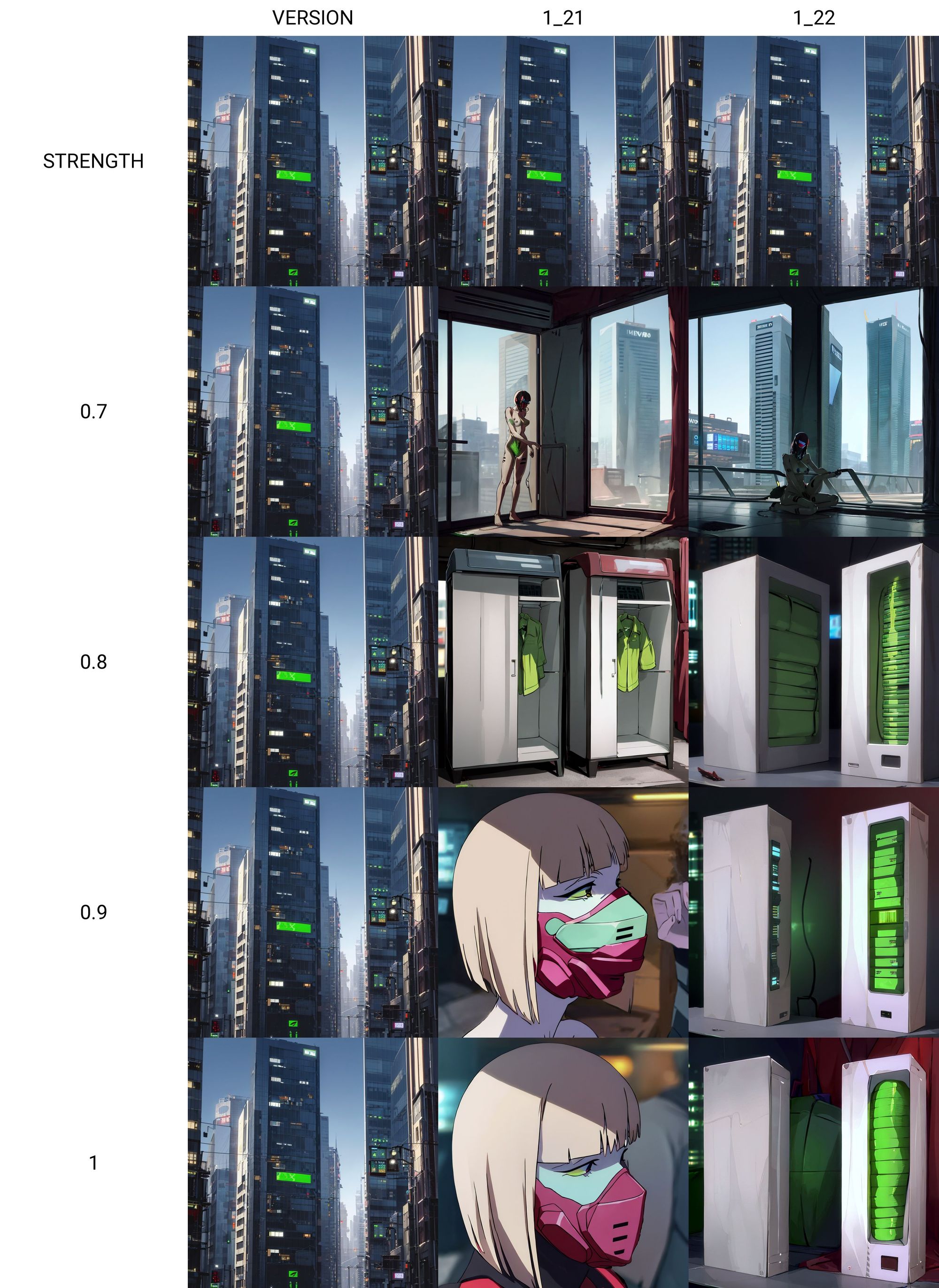

Now, let's do a quick recap of what the previous iteration generated.

As you can see, the results lean towards the generic side, with a few imperfections. It's not terrible, but there's definitely room for improvement. I must admit, though, I'm quite fond of the alternative color schemes.

Now, let's shift our focus to the creations of v1_21 (1.2.1 - 86 x 86).

Time for some insights. Even those with minimal attention to detail will notice this post's hero image was crafted using this version. It cleverly adapted to the prompt and even got creative with the mask color, matching it to the suit. My only gripe is that it lacks Kiwi's slender neck, meaning the image was likely based on a generic character as a starting point. But, it's close enough – I might even prefer it to the v1 version.

Regarding the picture with the dress, we can see that the AI knows where to apply the tattoo and conforms it accordingly. Impressive. The anatomy is on point, and the dress is a nice blend of her usual attire and a dress, I suppose. There's no artifacting or any weird glitches, and while the face quality could be better, that's an easy fix with inpainting. Overall, not much to criticize here.

I generated a few more images, and almost all came out great. The AI was consistent in respecting her tattoos and cyberware. However, I noticed a preference for generating nude images, which became a recurring theme in these sets. The lack of artifacting was a huge plus for me, but as we'll see later, the issue that bothered me might not have been artifacting but perhaps another cause.

Feeling inspired by these results and the lessons from my previous post in this series, I decided to test the waters by setting the Network Rank and Network Alpha both to 32, while keeping everything else the same. That's when I plunged deeper into the rabbit hole and ended up devising four additional variations to test my theories.

I generated some images, but was left unimpressed by all of them. I soon realized I had messed up a few settings, like forgetting to set the Clip Skip to 2, which forced me to re-train and disregard some of the results. Remember, Clip Skip should only be set to 2 when generating LoRA from one of the anime checkpoints. For Stable Diffusion checkpoints, Clip Skip 1 is just fine. This LoRA iteration used the version number v1_22 (1.2.2), and the training ended with a final loss of 0.0763. Let's delve into what happened.

Time for some analysis. I was quite displeased with the results of v1_22. Even with the character keyword (i.e., kiwi-cyberpunk) present, it wouldn't generate the character by default. I had to resort to helper prompts like "1girl" and so on. For the above images, I used additional prompts, but the outcome was a very generic character. It might retain some core elements, but overall, it reverts to creating generic anime girls – which could be due to undertraining or other factors. It's hard to pin down the exact issue at the moment.

However, this could be intriguing if you only want to use specific aspects of your LoRA and apply them to the base model. The small file size of the LoRA is a bonus, so while I wouldn't necessarily generate a character on this, clothing might work. It could be possible to use these results and apply the clothing to other characters.

While generating images based on v1_22, I noticed some didn't include the character at all, even with the character name in the prompt. I ended up comparing the outcomes from both LoRAs to see what would be generated for each prompt. When a character was generated, it still felt pretty generic.

So, I've learned that a measly Network Rank and Network Alpha of 32 just won't cut it for character LoRAs. Naturally, I had no choice but to go big – Network Rank 128 and Network Alpha 128, here we come. This little experiment has the version number v1_23 (1.2.3). I was so thrilled to see the results that, oops, I totally forgot to jot down the final training outcome before closing the window. Oh well, let's just see what sort of mess we've got this time.

Well, isn't this just delightful? The artifacting is back with a vengeance. I was starting to doubt myself, but it's definitely not overtraining. So now I'm suspicious that it might be the Network Alpha causing the artifacting. Or maybe it's some weird "style" – but comparing the original images to the generated ones, I can't say it's actually a style. I mean, I don't watch cyberpunk and think, "Gee, this is super artifacted." It's bizarre, and I can't pinpoint the cause.

I generated some close-up images, which should be this LoRA's specialty. It does create decent facial features, but there's this odd style that I just can't stand. Another observation: this version (v1_23) is more likely to produce an image of Kiwi. It seems the lower the Network Rank or Network Alpha, the less likely it is to generate an image of the character it's trained on. This raises an intriguing question: is the artifacting in my previous LoRA version – the ones that ran for 9 hours and were overdone – due to this setting?

Honestly, I'm not sure what's happening here, considering I only changed the Network Rank and Network Alpha. This is undeniably a downgrade from v1_21 (86x86). That said, v1_23 (128x128) stays truer to the original idea – we can see Kiwi's long, slender neck, which generic characters lack. The lines are sharper, and it captures her body type accurately. But ultimately, the results are still unsatisfactory.

Armed with the theory that the artifacting (or whatever that mess is) might be due to an excessively high Network Alpha setting, I put it to the test. I re-ran the training with a Network Rank of 128 and a Network Alpha of 86. This iteration is dubbed v1_24 (1.2.4), and it ended with a final loss of 0.0714. Now, let's examine the fruits of this effort.

Ah, déjà vu, anyone? The artifacting persists, and I'm far from impressed with these results. I didn't waste any more time on this iteration, but I will mention that the body anatomy remained intact, considering the images weren't cherry-picked.

Driven by curiosity, I decided to flip the settings – running the training with a Network Rank of 86 and a Network Alpha of 128. This new experiment is known as v1_25 (1.2.5), and it concluded with a final loss of 0.059.

Reflecting on 1.2.5, while it managed to eliminate the artifacting, it brought a whole new set of issues to the table. Aside from the face, which isn't any better than what 86x86 can generate, it completely butchered anatomy. Every image had some form of deformity – ludicrous arm lengths, weird overall body shapes, mismatched heads and bodies, you name it. And don't even get me started on the utter inability to draw legs, hands, or fingers – it was a disaster. So, yeah, the images shown for v1_25 were definitely cherry-picked. This iteration was undoubtedly one of the biggest failures.

However, this debacle sparked an idea. Since I now have a diverse array of version 1.2 training variations, I could generate the body, pose, and pretty much everything else with v1_23 (128x128), then pass the result through ControlNet and clean it up using version 1.2.1 (86x86). In theory, this would combine the best of both worlds – or perhaps allow me to mix and match with other versions.

Here is a reminder of the various v1.2 LoRAs

V1_2x - Network Rank x Network Alpha Final Loss

V1_21 - 86 x 86 Final loss 0.0607

V1_22 - 32 x 32 Final loss 0.0763

V1_23 - 128 x 128

V1_24 - 128 x 86 Final loss 0.0714

V1_25 - 86 x 128 Final loss 0.059

Having ventured deep into the rabbit hole, I circled back to v1_21 and combined it with different LoRAs to see what would happen. Overall, I'm quite impressed by the results. Out of all the variations, the 86x86 version is my favorite.

Though I didn't showcase them in this post, there was a bizarre influx of nude image generations. I still have no clue why that's happening. It's an intriguing side effect of the training. With my image set of 20 repeats and 35 images, the AI seems eager to render her without clothes, yet the tattoos are usually spot on. The issue now is that I never tagged her outfit, so I don't have a keyword to add it back. I did tag all the nude images in the training set, so it's strange that it's inverting that rule, but honestly, it doesn't bother me much. It can add clothes to the image when prompted, but this unexpected side effect is quite fascinating. I think I prefer the white list approach, but the problem is that once I alter the training settings again, this quirk would vanish.

Final Observations:

It seems like I could potentially train v1_21 more to achieve sharper lines and better character conformity. However, v1_23 (128x128) feels overdone already. Is a Network Rank of 128 just excessive, causing deformities if the Network Alpha isn't matched? Clearly, v1_22 is an outright failure, except for close-up images. But considering the majority of my training images are close-ups, does this mean it can only generate images based on what it's familiar with? Or was the training data too much for the 33MB LoRA? If it's a size issue, then the 130MB LoRA shouldn't be problematic, but evidently, they are.

In my specific case – a single character with 35 images – a Network Rank of 86 and Network Alpha of 86 appear to be the ideal "Goldilocks" settings. Moving forward, I'll try increasing the training epochs to see how additional training influences the results.

So, what's next?

I plan to experiment with combining multiple LoRAs and testing how well they perform on ControlNet. I've used it before and achieved some great results, but I'll play around with it some more – perhaps that could be an addendum to this, more of a spin-off than a mainline entry. There's also the possibility of continuing the training to 20 epochs and trying to reduce the artifacting in older trained LoRAs by combining them with more generic ones to generate something better. I don't have a clear plan yet, as I need to think about what I'm actually looking for in a final image generation. How am I internally scoring what I'm seeing? Honestly, there's no scientific measurement; it's all about my gut feelings.

I've pondered this quite a bit – since the majority of my images are close-ups of her face, I ran through all the LoRAs in this session again using the "close_up" tag. For the most part, none of them did a terrible job on the face, except for v1_25, which was a disaster. V1_24 was decent in a close-up scenario.

I've also considered my training images. Since I don't have any official high-res artwork, it's a bit tricky. Maybe I'll generate and tag a second image set, build it out, and compare the results. My feelings are mixed: if it was too easy, I'd lose interest, but because it's a challenge that keeps me on my toes, I can't help but want more – practical use case be damned. Plus, there's the constant thought of how to bring my results closer to my vision once I achieve them. Is the current tech sufficient? Maybe in the near future, we'll have better models or training techniques, and all this will be obsolete. But for now, the journey continues.