LoRA Experiment 8 - Making progress and overturning my past findings

tl;dr Two main points

- Do not upgrade your Nvidia drivers to Version 535! If you have then downgrade to version 531

- At least double your training's Network Alpha based on your Network Rank

Oh, isn't this a pleasant surprise? It appears that my previous findings have been thrown into the trash bin. I'd like to say it was me who found it but it was an observation I made while doing other things, so credit goes to whoever knew that. So I have to admit it but the settings I previously proposed are, in fact, not as impressive as I once thought.

Let me clarify before you jump to any hasty conclusions - the previous posts aren't necessarily wrong. They're just... inferior to the new information I've stumbled upon. In due time, I'll be amending them to reflect this.

This is a long article so I will split it up into

I know, I know, it's been an eternity since the last installment in this thrilling saga. To be frank, I'd run into a rather stubborn obstacle and couldn't find a way around it. So, like any sensible person, I decided to divert my attention elsewhere for a while.

A serendipitous discovery

I busied myself with various things, such as tampering with some open-source LLM's. (If you're curious about my thoughts on Falcon LLM, you can find my unfiltered opinion here). And then, like a bolt from the blue, something caught my eye.

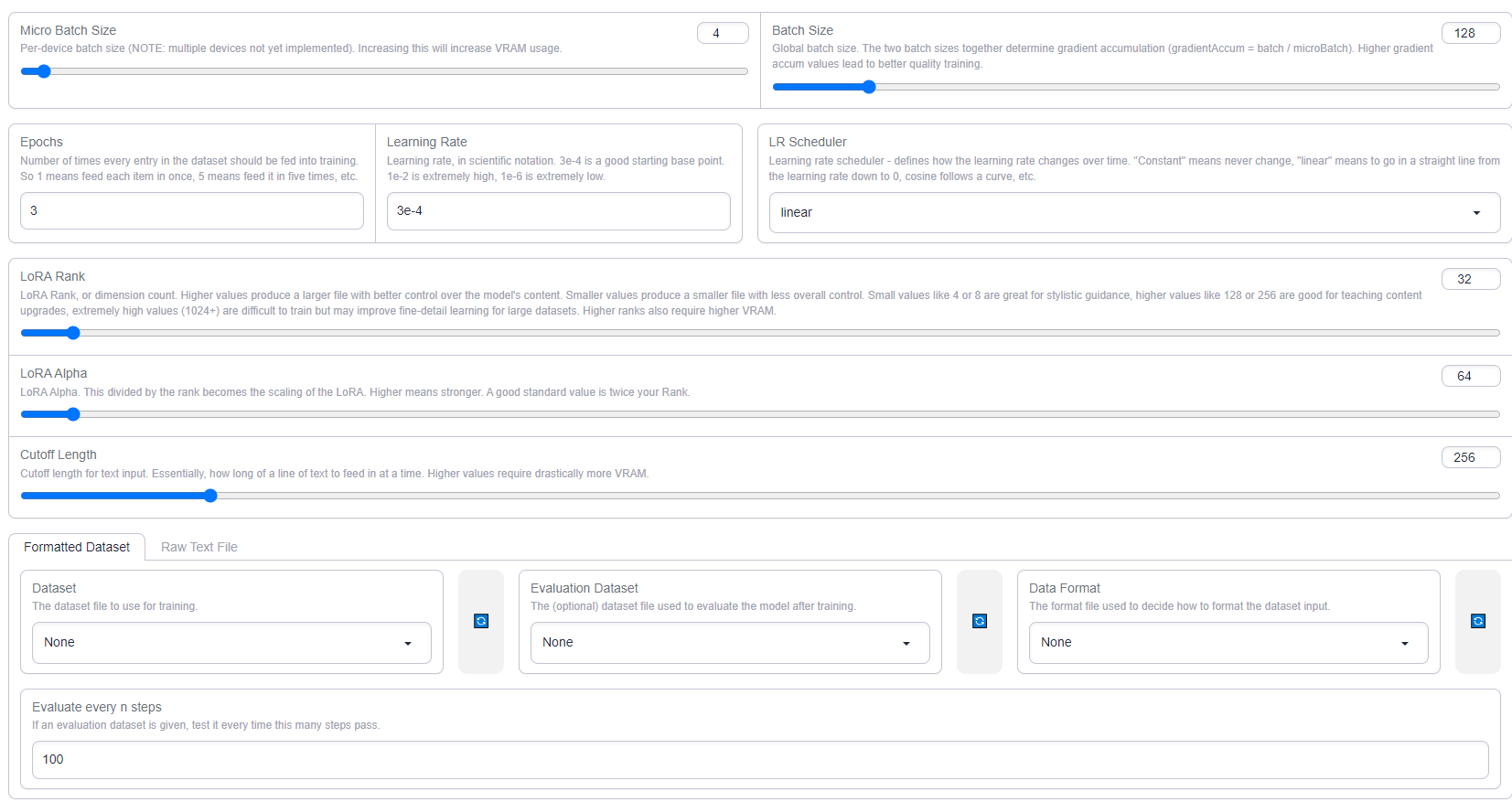

Fate or dumb luck, who knows? But there it was, glaring at me from the settings panel.

Just goes to show, everyone needs a little serendipity sometimes. In case I need emphasize what is written here, the LoRA training suggesting for training a LLM is to double your Network Alpha based on your Network Rank value. Double. Your. Network. Alpha.

Before we jump headlong into the results that the doubling of network alpha brings about, let's take a moment to pay our respects to my numerous blunders and wasted efforts. Yes, you heard me right. This journey hasn't been as straightforward as it may seem from the outside.

My failures and analysis

First off, let's revisit the "glorious" path I initially embarked upon. If you recall from the closing lines of my last article, I stupidly decided to redo my dataset. As if that wasn't enough of a hassle, I had this brilliant idea that my training images could use a bit of, well, 'beautification'.

Oh, what a colossal waste of time that turned out to be!

In case you're interested, here's a glimpse of the pathetic remnants of my failed endeavors.

Gawk all you want, but remember - it's the failures that lead us to success. I won't write off this new dataset entirely just yet as I can still tweak it a lot more and it might just yield some fruitful results. But first it's time to dissect this mess.

For starters, the results were nothing short of disastrous. Crucial details vanished into thin air. This happened because I decided to take my high-resolution screenshots and then resize them using the resize tools in StableDiffusion, thinking that smaller, crisper images might be the key.

I also went ahead and discarded a large number of images from the new set. I wanted to go with 'higher quality' instead of 'quantity' in this data set, even though you could hardly describe the previous data set 'quantity' or quality.

Every single image got a fresh labels, with 'cyki' serving as the new character tag. My revised image pool now boasted a grand total of 15 images. There are 2 LoRA's I've made from this image set. Cyki v0.5 I set the repeats to 15 and let the training run for 20 epochs. But what did I end up with? A massively undercooked LoRA, as you can see:

And just for reference, I ran it at 86 x 86 network rank and alpha, respectively. Cyki v0.6 used 30 repeats set to 30 epochs, it was more 'cooked' but I'd still consider it a failure.

The issues weren't limited to just 'dropping details'. The new versions also messed up the antonymy and had strange artifacting sometimes. The LoRA also outputs results which is more of a generic anime girl style, this might be due to undertraining or just due to a lack of variety in my images. Once again as with my other observations the close up images are always spot on, as the majority of my images are based on close up shots at various angles.

I spent 3 to 4 hours resizing and re-tagging everything, it was boring and did not pay off ...yet. In the end, the results were a massive letdown, not even remotely worth the effort. And that's when I stopped. The entire experience felt like a step backward. The quality of the images I used for input this time also varied greatly to me, but at least they have all become 'standized'. But as always, my instincts told me to train this as v0.5, and they've seldom led me astray.

Following this trainwreck of an experiment, I felt the pressing need to distance myself from StableDiffusion for a while. There was another task that I'd been conveniently avoiding - upgrading the versions of my libraries and plugins. I knew all too well that this wasn't going to be a walk in the park and braced myself for a day or two of relentless debugging.

In the beginning, things were surprisingly smooth sailing. I even managed to update my GPU drivers without causing a major disaster. Then I thought, why not take my new image set for a spin? So, I decided to set the repeats to 30 images and let the training run for 30 or 36 epochs.

Confident in my plan, I set the process in motion on a Sunday night, expecting to wake up to completed training. However, something odd caught my attention - the system was predicting over 5 hours for training. While it was perplexing, I brushed it off, thinking that it would finish by the time I woke up.

Imagine my surprise when I awoke to find that the training had crashed. Thrown off but not discouraged, I decided to use the epochs that had managed to complete and assess the quality of the output. But that's when I noticed we had hit a major snag. The system was taking an absurd 2 minutes to generate a single image!

Thinking it was an issue specific to the failed training, I decided to test my previous LoRA. And wouldn't you know, the same problem persisted. What used to take milliseconds was now taking over 8 seconds for a single iteration. We were now counting in seconds per iteration (s/it) rather than iterations per second (it/s).

In a fit of confusion, I double-checked my package versions and ensured Python was functioning properly. Left with no other choice, I turned to Google for answers. I stumbled upon a thread on a Github issue where someone had highlighted a potential driver issue. For details please see: https://github.com/vladmandic/automatic/discussions/1285

Skeptical but desperate, I checked the validity of their claim and found that they were, in fact, right. I promptly downgraded my drivers and rebooted the system. The speed loss was instantly reversed, much to my relief.

I planned to initiate training before going to sleep, but fatigue got the better of me, and I ended up dozing off without hitting the train button. A couple of days later, having almost forgotten about it, I eventually trained a new set.

New training sets

Now, with that out of the way, let's delve into the more successful parts, shall we?

So, after all this turmoil, I decided to go back to basics and prepare two new training sets based on my original image data and tagging. Thank the heavens I didn't delete it. Each set took less than 3 hours to prep. The first one peaked at 20 epochs, with a network rank of 86 and an alpha of 172. Let's see the outcome, shall we?

Immediately, I was taken aback by the striking improvement in the depiction of hands. Not only were they better, but the Kiwi's signature cigarette also made regular appearances, held delicately between her fingers! This felt like a significant stride forward, especially considering how I'd been struggling with the hand antonym and overtraining issues previously.

Feeling emboldened by these unexpected results, I pushed my luck and ran another training round, this time for 36 epochs, with a Network Rank of 86 x Network Alpha 258 (Triple the Alpha). My original plan was to push for 4x Network Alpha, but that would have meant cranking up the alpha to 344. Unsure if I could train that high, I decided to stick with 86 x 258.

Now, let's have a look at the results of this audacious experiment.

Inspired by the interesting outcomes, I decided to have a bit of fun and push the LoRA to its limits. While the results were less than groundbreaking, it did occasionally struggle. These images were generated on a CFG scale of 9.

But I want to draw your attention to a few intriguing details in this set. Take note of the patterns on her fingers. At first glance, it seemed like an error, as if the model was drawing outside the lines. But on revisiting the 'source material', I found that she indeed has these patterns. How the LoRA and model picked up this minute detail from just one or two images was mind-boggling. The marvels of LoRA training, am I right? Everything is unexpected.

Now, let's compare these outcomes to the 'winning' image from my previous post, which had a network rank and alpha of 86 x 86.

And, wow, what a difference! The earlier image looks positively mediocre in comparison - the anatomy is off, and it's decidedly washed out, and the clothing choice is a bit strange.

On another note, I also came across a LoRA trained and marked with the version v1.3 that I had forgotten to document. You can see the resulting image here

If I had to hazard a guess, I probably increased the epochs based on what I wrote in the previous post but looks like I gave on it pretty quick as it was a dead end.

After tinkering with it a bit more, though, I can't say I'm a fan. It's a shame that the process went undocumented, but the output has clear issues with the fingers. Not quite the result I was hoping for, to be honest. But I think I might have realized back then it was a dead end and just stopped with it as if I look through the images I generated with it I really did not generate much, the version number suggest it should have been for this post.

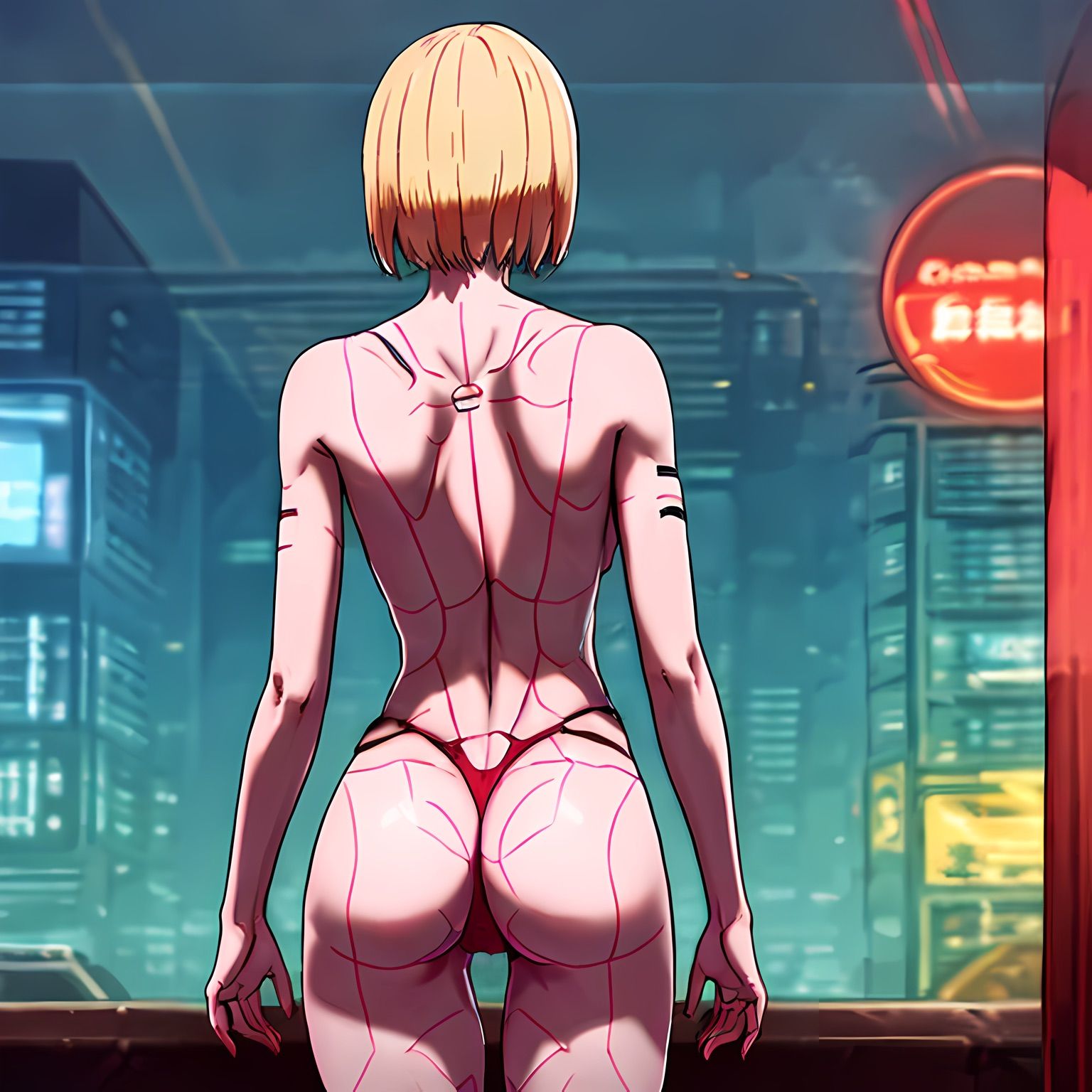

Now, take a moment to appreciate this stunning back image. This was generated with v1.31-2 - Network rank 86, Network Alpha 258. Sure, she doesn't have those tattoo patterns in reality, but to accurately train that would mean feeding my own images into the training, which has it's own set of issues. What's currently irking me, however, is the lack of vibrancy in the generated pictures. I believe I can coax more colour out of them, but that'll require another round of tinkering and testing.

On a positive note, the overall 'quality' has indeed improved. But is it just a consequence of the LoRA picking up on the 'style' of the input? This is something I need to avoid, as I'm after the features while leaving the style up to the model. What is this mysterious 'Network Alpha' setting. It seems it allows more freedom to both the LoRA and model, as we can see with changing the outfit or how the hands are being rendered. I think it's safe to say the LoRA has too little images to properly make hands so it must be using the hands from the model and the LoRA is just modifying that, instead of the other way around. However I don't want to say too much as I only have theories and speculation which I need to prove or disprove to myself.

Conclusion

So, where do we go from here?

Well, I intend to push the alpha to its limits – or at least until I'm satisfied. Next, I'll see if I can jazz up the washed-out colours, or tweak them somehow. Also, I'm keen to coax further increases in 'quality' until I'm happy with the results. Lastly, I want to revisit my second image set to see if I can polish that up to par, or better yet, outshine my initial data set. It's time to yet again try and make progress or improvements on something I don't know how to achieve... yet, but that's the challenge!